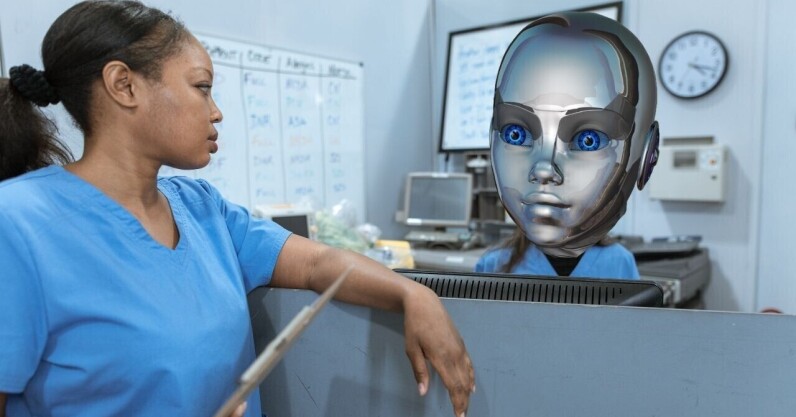

Scientists fear that using AI models such as ChatGPT in healthcare will exacerbate inequalities. The epidemiologists, from the universities of Cambridge and Leicester, warn that large language models (LLMs) could entrench inequities for ethnic minorities and lower-income countries. Their concern stems from systemic data biases. AI models used in healthcare are trained on information from websites and scientific literature, but evidence shows that ethnicity data is often missing from these sources. As a result, AI tools can be less accurate for underrepresented groups, which can lead to ineffective drug recommendations or racist medical advice. “It is widely accepted that a differential…

This story continues at The Next Web